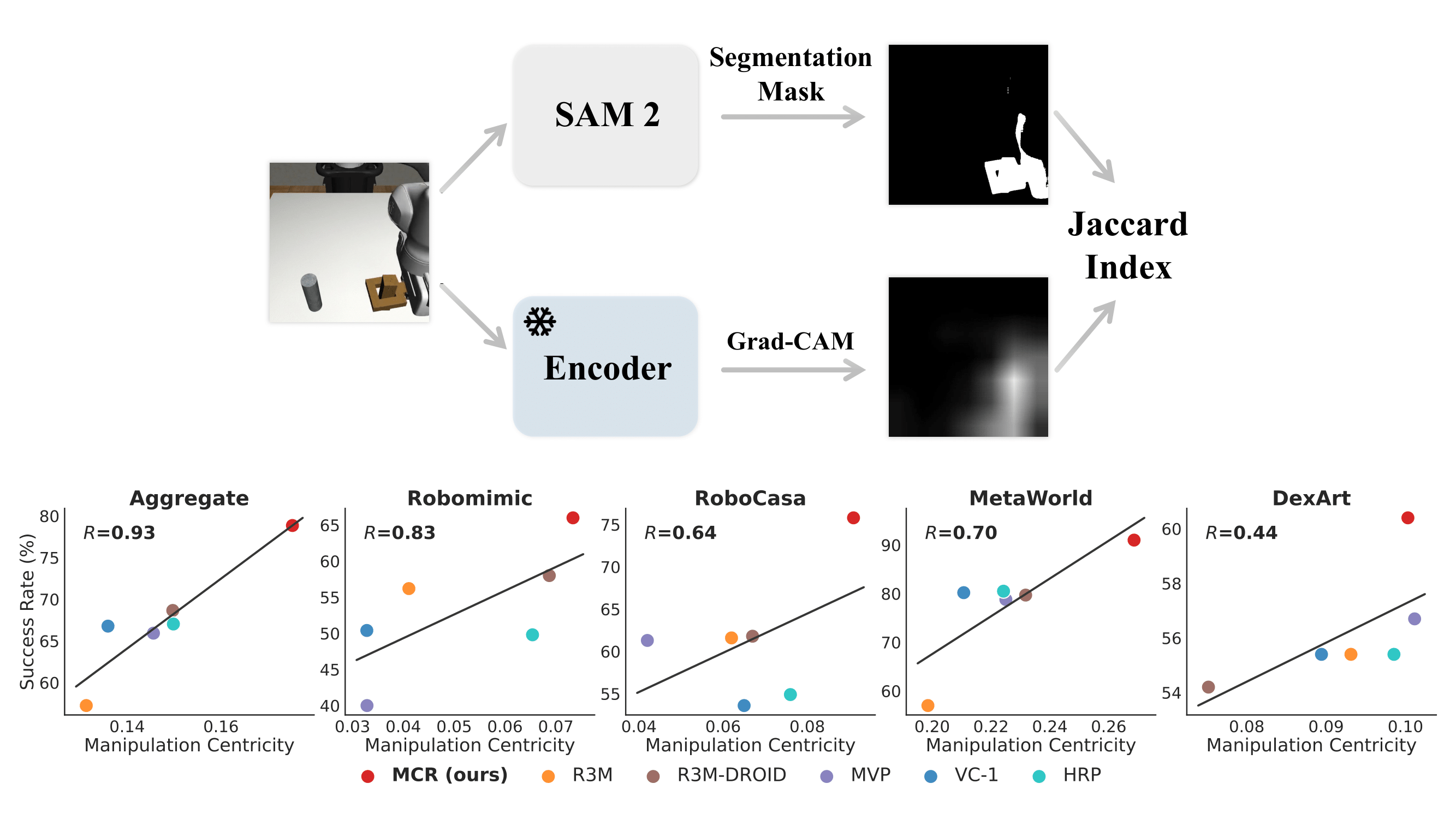

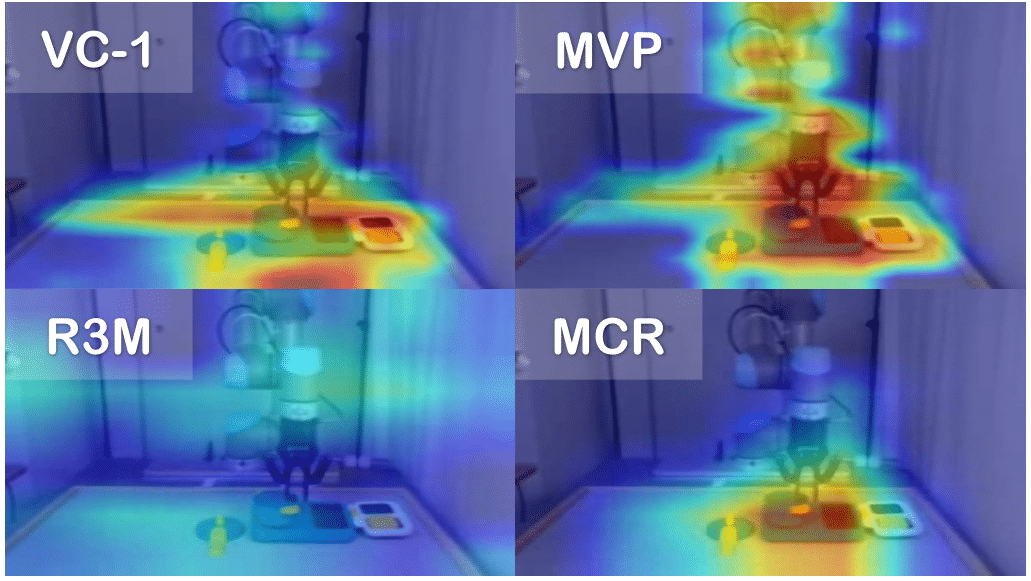

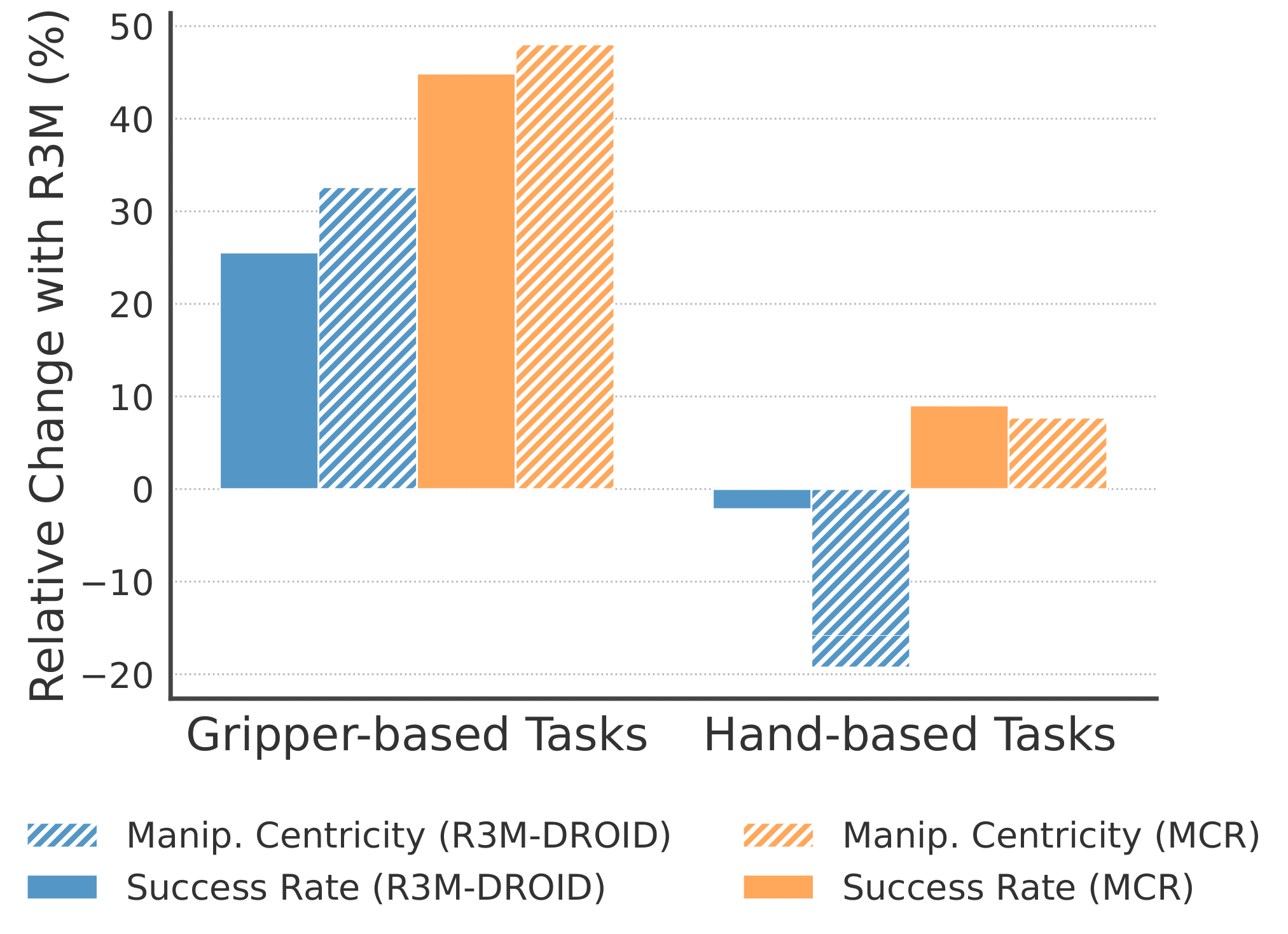

Manipulation Centricity quantifies how strongly a representation focuses on task-relevant regions by measuring the similarity between its Grad-CAM visualizations and ground-truth regions identified by SAM2. This measure is predictive of downstream performance.
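
As a rough illustration of comparing a saliency map against a ground-truth region, the sketch below thresholds a heatmap and computes its Jaccard index (intersection over union) with a binary mask. The function names, the min-max normalization, the 0.5 threshold, and the use of the Jaccard index are all assumptions made for this example, not the exact procedure behind the figure. In practice the heatmap would come from Grad-CAM and the mask from SAM2. Here both are small hand-written grids.

```python
def binarize(heatmap, threshold=0.5):
    """Min-max normalize a 2D heatmap to [0, 1], then threshold it."""
    flat = [v for row in heatmap for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant map
    return [[(v - lo) / span >= threshold for v in row] for row in heatmap]


def overlap_score(heatmap, mask, threshold=0.5):
    """Jaccard index between the thresholded heatmap and a binary mask.

    Hypothetical helper: an assumed stand-in for the similarity measure.
    """
    hot = binarize(heatmap, threshold)
    inter = union = 0
    for hot_row, mask_row in zip(hot, mask):
        for h, m in zip(hot_row, mask_row):
            m = bool(m)
            inter += h and m  # pixel is salient AND task-relevant
            union += h or m   # pixel is salient OR task-relevant
    return inter / union if union else 0.0


# Toy 3x3 example: stand-ins for a Grad-CAM map and a SAM2 mask.
heatmap = [
    [0.0, 0.2, 0.9],
    [0.1, 0.8, 1.0],
    [0.0, 0.1, 0.3],
]
mask = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 1],
]
print(overlap_score(heatmap, mask))  # 0.75
```

A score near 1 means the salient pixels coincide with the task-relevant region, and a score near 0 means the representation attends elsewhere. Averaging such scores over frames and tasks would give one number per representation.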

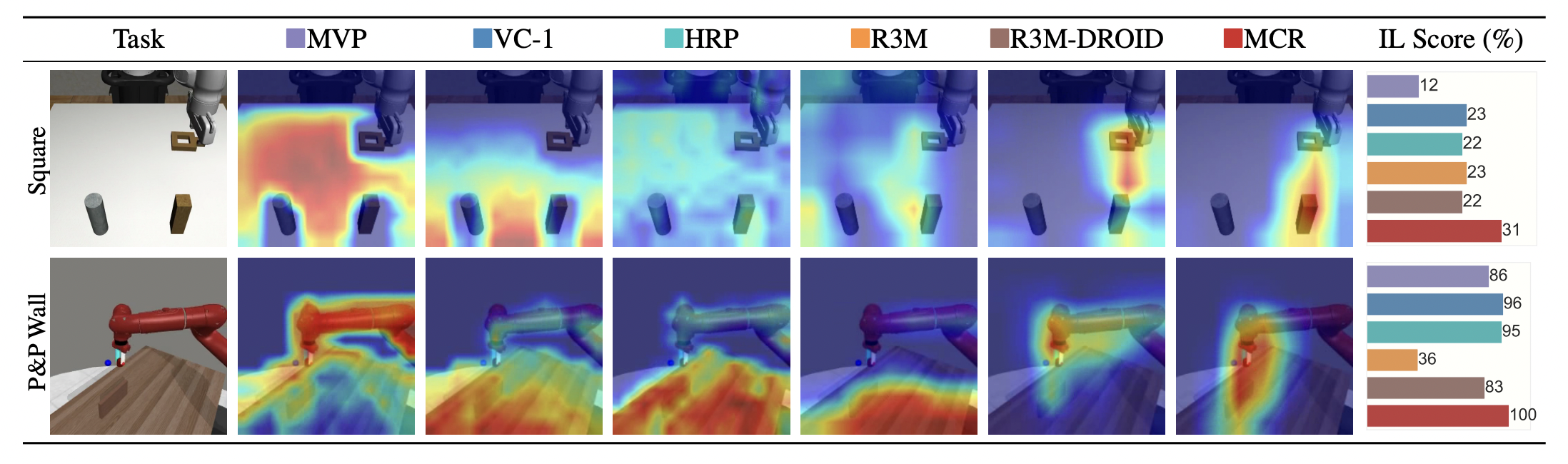

Grad-CAM visualizations for the Square task from Robomimic and the Pick Place Wall task from MetaWorld.

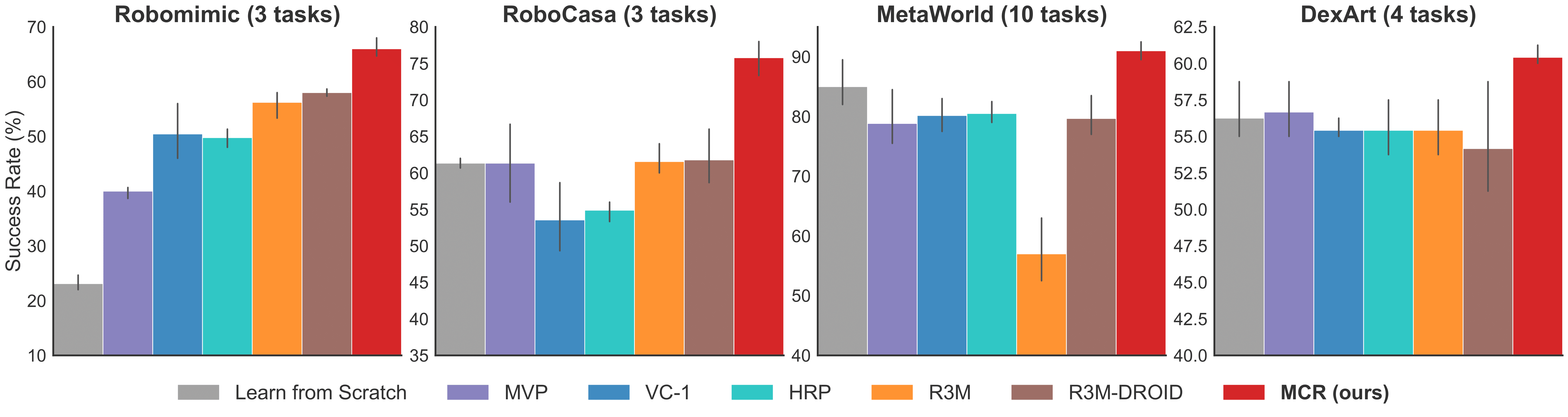

4 Domains: MetaWorld, DexArt, Robomimic, RoboCasa; 20 Tasks

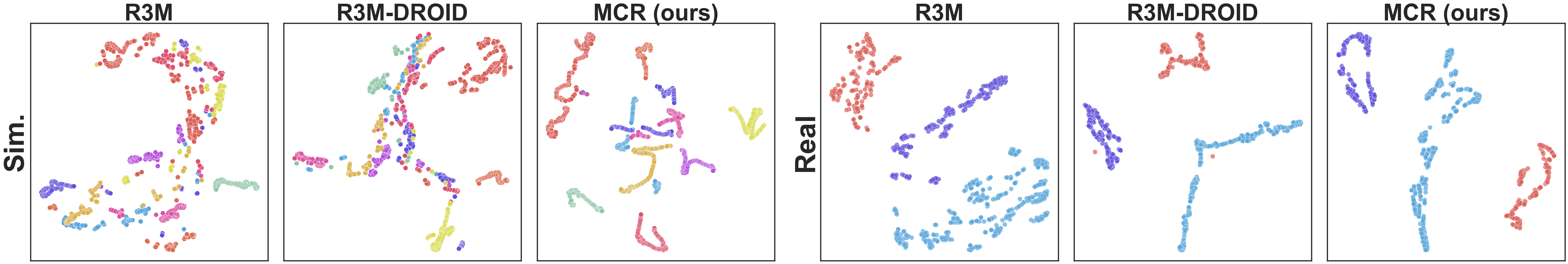

We visualize the learned representations with t-SNE on 10 simulation tasks from MetaWorld and 3 real-robot tasks. Each dot represents an image frame, and each color indicates a task. The results suggest that (1) our representation clusters frames by task better than the compared representations and (2) robot data benefits robotic representation learning.

@article{jiang2024robots,
  title={Robots Pre-Train Robots: Manipulation-Centric Robotic Representation from Large-Scale Robot Datasets},
  author={Jiang, Guangqi and Sun, Yifei and Huang, Tao and Li, Huanyu and Liang, Yongyuan and Xu, Huazhe},
  journal={arXiv preprint arXiv:2410.22325},
  year={2024}
}